
(*.tps) Cache Files

Subscription Drop 3 introduces Frame Based Caching, in which each frame of an animation is written to a separate file. Read more about it in the Frame Based Caching documentation.

thinkingParticles incorporates a powerful particle simulation caching system that can greatly accelerate particle playback and speed up rendering in network setups.

Even though nearly everything seems possible, not everything is. There are some restrictions on how simulation caches can be stacked, or recorded from a DynamicSet. One pitfall that is easy to run into when using cache files is the freedom thinkingParticles gives you to modify time at any stage of an animation. The true non-linear animation concept, first introduced to 3ds Max by thinkingParticles, can cause issues when the recorded time (Sub Frame Sampling) differs significantly from the actual playback time. Optimized functions have been developed to reduce particle playback jitter caused by such timing differences; however, they can only compensate so much.

Cached particle simulations can be “re-used” in dynamics simulations, where the recorded particles act as unyielding deflectors. Recorded particles can also be used to spawn other particles, or they may be influenced by external force fields.

Caching a Particle System Simulation

The first step in working with particle cache files is to create one or more of them. Cache files can be recorded in several places within thinkingParticles. The “cache files” discussed in this chapter are those created either by the MasterDynamic Set or by a DynamicSet.

Dynamics solvers such as Bullet Physics, SC or PhysX can also create cache files to bake their physics simulations to disk. However, those files only cover rigid body simulations; they do not cache complete particle systems the way the MasterDynamic Set does.

To record a MasterDynamic Set, use the Record function in the main MasterDynamic rollout menu. To learn more about the available options and settings, see: Master Dynamic Rollout Menu

Recording complete particle system simulations is easy and straightforward. Keep in mind, however, that cache files can be very large. Depending on the content and the number of sub-samples used in a particle simulation, cache files can easily grow to several gigabytes! They can get even bigger when heavy meshes and changing particle shapes are used in a simulation.
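As a rough, back-of-the-envelope illustration of how quickly cache files grow, the Python sketch below assumes a simple linear model: one fixed-size record per particle for every stored sub-sample. The 64-byte record size and the scene numbers are hypothetical, and the model ignores mesh and shape data, which can make real caches much larger; it says nothing about the actual *.tps file layout.

```python
def estimate_cache_bytes(frames: int, subsamples_per_frame: int,
                         particles: int, bytes_per_particle: int) -> int:
    """Linear estimate: one record per particle for every stored sub-sample.

    Hypothetical model for illustration only; it ignores mesh/shape data,
    headers and any compression used by the real cache format.
    """
    stored_samples = frames * subsamples_per_frame
    return stored_samples * particles * bytes_per_particle


# Hypothetical scene: 300 frames, 4 sub-samples per frame, 100,000 particles,
# and an assumed 64-byte record per particle per sample.
size = estimate_cache_bytes(300, 4, 100_000, 64)
print(f"{size / 1024**3:.1f} GiB")  # prints 7.2 GiB
```

In this model, doubling either the sub-sample count or the particle count doubles the estimate, which is why sub-sampling settings have such a direct effect on disk usage.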

Once a cache file exists, it can be used to play back the stored particle positions and shapes without re-running the simulation over and over again. This is a great time saver, and it guarantees exactly the same results, regardless of the number of playbacks or whether an animation is rendered in a network environment by one or many workstations. It is recommended to cache complete particle simulations before rendering them on a network of PCs, because 3ds Max, like particle systems in general, naturally follows a differential approach: the particle state at any frame is computed from all the frames that precede it.

Every PC in a network setup has to simulate the particle effects all over again for every frame it receives. If, for example, frame 1200 is assigned to a network machine, that machine has to simulate frames 0 through 1200 before it can render that frame. Caching the particle simulation to a file eliminates this unnecessary waste of simulation time completely.
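The cost difference can be made concrete with a small Python model of the behavior described above: without a cache, each assigned frame is simulated from the start frame again; with a MasterDynamic Set cache, which allows direct access to any frame, each frame is simply read. The function name and the one-unit read cost are illustrative assumptions, and the one-time cost of recording the cache is not counted.

```python
def frames_evaluated(assigned_frames: list[int], cached: bool,
                     start_frame: int = 0) -> int:
    """Count frame evaluations a render node performs for its assigned frames.

    Hypothetical model: without a cache every frame restarts the simulation
    at start_frame; with a random-access cache each frame costs one read.
    """
    total = 0
    for frame in assigned_frames:
        if cached:
            total += 1                          # read this frame from the cache
        else:
            total += frame - start_frame + 1    # simulate start_frame..frame
    return total


print(frames_evaluated([1200], cached=False))  # 1201 frames simulated
print(frames_evaluated([1200], cached=True))   # 1 frame read
```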

DynamicSet Caching

Before sending the final rendering job to the farm, it is recommended to cache out the complete particle simulation in one go, using a MasterDynamic Set cache file. Setting up complex particle effects with a lot of rigid body dynamics, on the other hand, can be challenging. To ease this process and speed up the setup of complex particle simulations, thinkingParticles supports a “stacked” caching approach.

The idea behind stacked caching is to cache out individual DynamicSets or dynamics solvers as soon as they are finished. Once a DynamicSet has been baked to a file, it no longer changes and no longer consumes processing time, because its particles, along with all their shapes, are read from the hard disk. This “stacking” can be applied to one DynamicSet after another until the final simulation is ready to be cached out.
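A minimal Python sketch of why stacking pays off: each DynamicSet contributes its full simulation cost to a playback pass until it is cached, after which it only contributes a much smaller read cost. The set names, costs and read cost below are hypothetical numbers chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class DynamicSetStage:
    name: str
    sim_cost: float        # hypothetical seconds to simulate this set
    cached: bool = False   # True once the set has been baked to a file


def pass_cost(stages: list[DynamicSetStage], read_cost: float = 0.0) -> float:
    """Cost of one playback pass: cached sets pay only the assumed read cost."""
    return sum(read_cost if s.cached else s.sim_cost for s in stages)


stages = [DynamicSetStage("Debris", 120.0),
          DynamicSetStage("Dust", 60.0),
          DynamicSetStage("Sparks", 30.0)]

print(pass_cost(stages, read_cost=5.0))  # 210.0: nothing cached yet
stages[0].cached = True                  # "Debris" is finished and baked
print(pass_cost(stages, read_cost=5.0))  # 95.0: 5.0 + 60.0 + 30.0
```

In this model, every finished set that gets baked removes its simulation cost from all later passes, which keeps iteration on the remaining sets fast.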

Important

It is possible to use the MasterDynamic cache to write out DynamicSets that are already cached. Be aware, however, that hard disk consumption may double, or grow by the size of each individual DynamicSet cache file, because the resulting cache file includes all simulations previously done in every DynamicSet, combined into one big “Master” cache file.

Caching out a DynamicSet to a file is a straightforward process. In the DynamicSet Tree View, right-click the small icon to bring up the caching options for a DynamicSet. To learn more about this process, follow this link: Right Click Menu

thinkingParticles is very flexible in handling and integrating cached particle simulations. However, there are some restrictions worth mentioning. When a DynamicSet is recorded, only particles created within that same DynamicSet, or in levels below it, are written to the cache file! All other DynamicSets outside that part of the hierarchy are deactivated during recording, so any particles created there are invisible to the recording.
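The recording rule can be expressed as a subtree query. In the Python sketch below, the hierarchy is a hypothetical mapping from each DynamicSet to the DynamicSets nested directly below it; recording a set captures that set and everything beneath it, while sibling and parent sets are left out.

```python
def recorded_sets(tree: dict[str, list[str]], recorded: str) -> set[str]:
    """Return the recorded DynamicSet plus every DynamicSet nested below it."""
    result = {recorded}
    stack = [recorded]
    while stack:                       # iterative depth-first walk of the subtree
        for child in tree.get(stack.pop(), []):
            if child not in result:
                result.add(child)
                stack.append(child)
    return result


# Hypothetical hierarchy: parent -> DynamicSets nested directly below it.
hierarchy = {
    "Master": ["FX", "Environment"],
    "FX": ["Debris", "Sparks"],
    "Environment": ["Dust"],
}

print(sorted(recorded_sets(hierarchy, "FX")))  # ['Debris', 'FX', 'Sparks']
```

In this example, particles born in “Dust” or “Environment” would not appear in a cache recorded from “FX”.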

Materials and tP Caches

Cached particle simulations can be re-used in other scenes or even instanced multiple times in a scene. Materials very often need to be changed while a project is being finalized. It would be a real workflow drawback if the simulation had to be re-calculated and written out to a cache file every time a material changes. This is why thinkingParticles separates the material information from the actual simulation data.

When a cache file is written to the hard drive, a standard 3ds Max material library file is saved alongside it. The simulation cache and the material library file share the same file name; this is how thinkingParticles detects which material library file belongs to the cached DynamicSet.

This approach lets texture artists keep working on shaders and textures while FX artists continue to work on the rigid body or particle dynamics.
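The file-name pairing between a cache and its material library can be checked with a short Python sketch, for example before handing files over to a texture artist. It assumes, hypothetically, that the *.mat file sits next to the *.tps file with the same base name; the helper names are illustrative and not part of thinkingParticles.

```python
from pathlib import PurePath


def material_library_for(cache_file: str) -> PurePath:
    """Same base name as the cache, with the .mat extension (assumed layout)."""
    return PurePath(cache_file).with_suffix(".mat")


def caches_missing_materials(filenames: list[str]) -> list[str]:
    """List *.tps files that have no matching *.mat file in the same listing."""
    present = {PurePath(f).as_posix() for f in filenames}
    return [f for f in filenames
            if PurePath(f).suffix.lower() == ".tps"
            and material_library_for(f).as_posix() not in present]


files = ["debris.tps", "debris.mat", "dust.tps"]
print(caches_missing_materials(files))  # ['dust.tps']
```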

thinkingParticles saves standard 3ds Max material library files (*.mat); these files can be loaded back into 3ds Max, and their materials can easily be modified. There are, however, some important restrictions on what can be changed within the materials, because the saved materials belong to a specific DynamicSet and each material is matched to a particle or mesh.

Important

Everything in a saved DynamicSet material may be modified (color, textures, shaders …) except the number of sub-materials or their order! If you want to be able to add sub-materials later, you must pre-assign empty sub-materials to the object before caching out the simulation. This lets you add new materials to the object afterwards without breaking the simulation cache.
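One way to picture why only the number and order of sub-materials are fixed: if each cached particle or mesh refers to its material by sub-material slot index (an assumption made for this illustration, not a documented detail of the cache format), then editing a slot's contents is harmless, while reordering slots silently remaps those references and changing the slot count breaks them. A minimal Python sketch:

```python
def resolve_materials(cached_ids: list[int], stored_count: int,
                      sub_materials: list[str]) -> list[str]:
    """Map cached per-particle slot indices to material names.

    Hypothetical model: the cache stores slot indices plus the slot count
    that existed when the simulation was recorded.
    """
    if len(sub_materials) != stored_count:
        raise ValueError("sub-material count differs from the cached simulation")
    return [sub_materials[i] for i in cached_ids]


ids = [0, 1, 2]  # hypothetical slot indices frozen in the cache
# Slot 2 was pre-assigned as an empty placeholder before caching.
print(resolve_materials(ids, 3, ["Concrete", "Glass", "(empty)"]))
# Editing slot contents and filling the reserved slot keeps every mapping valid.
print(resolve_materials(ids, 3, ["Concrete", "Tinted Glass", "Rust"]))
# Reordering slots: particle 0 now silently receives "Glass" instead of "Concrete".
print(resolve_materials(ids, 3, ["Glass", "Concrete", "Rust"]))
```

Adding a fourth slot in this model raises the count error, which mirrors why empty slots have to be reserved before caching.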

Things you should know

Simulation caches are saved with exactly the same time settings with which they were created. If, for example, a DynamicSet cache recorded with 30 sub-frame samples is “re-used” in a scene that uses 90 or 120 sub-frame samples, the resulting particle playback may look jittery.
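A simplified Python model of why mismatched sub-frame sampling can look jittery: positions exist only at the recorded sample times, so a playback running at a finer sampling rate has to reconstruct the samples in between. Here that reconstruction is plain linear interpolation of a particle moving on a circle; this is a stand-in, since how thinkingParticles actually reconstructs intermediate samples is not described here. The error is zero at recorded samples and peaks between them, a periodic deviation that shows up as jitter.

```python
import math


def true_position(t: float) -> tuple[float, float]:
    """Hypothetical ground-truth motion: a particle on a unit circle."""
    return (math.cos(t), math.sin(t))


def playback_position(t: float, rec_step: float) -> tuple[float, float]:
    """Linearly interpolate between the two recorded samples around time t."""
    i = math.floor(t / rec_step)
    t0 = i * rec_step
    a = (t - t0) / rec_step                  # 0 at a recorded sample, up to 1
    (x0, y0), (x1, y1) = true_position(t0), true_position(t0 + rec_step)
    return (x0 + a * (x1 - x0), y0 + a * (y1 - y0))


def max_playback_error(recorded: int, played: int) -> float:
    """Largest deviation over one frame for the given samples per frame."""
    rec_step = 1.0 / recorded
    worst = 0.0
    for k in range(played + 1):
        t = k / played
        (px, py), (qx, qy) = playback_position(t, rec_step), true_position(t)
        worst = max(worst, math.hypot(px - qx, py - qy))
    return worst


print(max_playback_error(30, 30))   # ~0: every playback sample was recorded
print(max_playback_error(30, 120))  # > 0: 3 of every 4 samples are interpolated
```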

As mentioned above, the MasterDynamic Set cache is the preferred method for final network rendering jobs, especially for complex scenes with motion blur. A key feature of the MasterDynamic Set cache is immediate access to any single frame in time, including going backwards, which is important for calculating motion blur. DynamicSet caches cannot do this, as they have to be evaluated from the start up to the current frame every time.
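The access pattern that matters for motion blur can be modeled in a few lines of Python. Times are counted in whole sub-sample ticks (a hypothetical unit), and the request order mimics a motion blur evaluation that samples slightly before and after the current frame. A forward-only reader, standing in for a DynamicSet cache, must restart from the beginning whenever it is asked to go backwards; a random-access reader, standing in for the MasterDynamic Set cache, reads each requested sample directly.

```python
def sequential_cost(requests: list[int], start: int = 0) -> int:
    """Samples evaluated by a forward-only reader (DynamicSet-style model)."""
    pos, cost = start, 0
    for t in requests:
        if t < pos:            # cannot step backwards: restart from the beginning
            pos = start
        cost += t - pos        # evaluate every sample between pos and t
        pos = t
    return cost


def random_access_cost(requests: list[int]) -> int:
    """Samples read by a random-access reader (MasterDynamic-style model)."""
    return len(requests)


# Hypothetical motion blur request order around tick 400: center, before, after.
blur = [400, 398, 402]
print(sequential_cost(blur))     # 802
print(random_access_cost(blur))  # 3
```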

Empty thinkingParticles Scenes

Cached particle simulation files can be reused in many ways. One obvious use is to cache out a complex particle simulation to avoid re-simulating it whenever the frame slider changes. A more advanced use is to instance cache files and re-time them. Once a complex simulation is written to a cache file, it can be reused in any other scene, or simply played back in an otherwise empty thinkingParticles scene.

This is how it works:

First, create a pair of nested DynamicSets; the lowest-level DynamicSet then reads in the cache file (right-click its icon to load it). Once the cache file is loaded and active, it plays back at its original position in space. Moving the simulation playback to a different position is easy and straightforward.

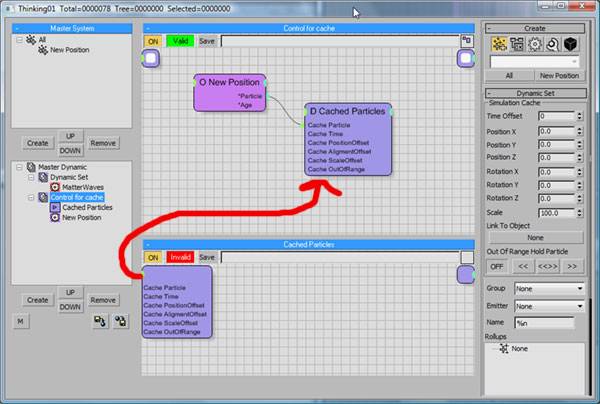

The DynamicSet responsible for playing back the cache file needs some of its inputs enabled to allow full control of placement and playback through wiring operations.

Click the upper-left corner of the DynamicSet as shown in the illustration above. This displays all available inputs for this DynamicSet. Enable one or more of them.

Every enabled input automatically shows up one level up in the hierarchy, where its data can be manipulated by wiring any operators to those ports.

To automatically set the position and instances of a simulation cache, simply connect a particle group's Particle output to the Cache Particle input of the DynamicSet. Such a wire setup automatically spawns multiple instances of the cache playback, based on the particle positions of another particle system.

The illustration above shows two nested DynamicSets: “Control for Cache”, at the top of the hierarchy, and “Cached Particles”, one level below it. The arrow indicates that a DynamicSet one level down can expose its parameters upwards in the hierarchy.
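Conceptually, wiring a particle group into the Cache Particle input places one copy of the cached playback at each particle of the controlling group. The Python sketch below shows only that translation step for a single frame; it is an assumption about the idea rather than the actual implementation, rotation, scale and re-timing are left out, and the function and variable names are hypothetical.

```python
Vec3 = tuple[float, float, float]


def instance_cache(cached: list[Vec3], controls: list[Vec3]) -> list[list[Vec3]]:
    """Return one translated copy of the cached particle positions per control particle."""
    return [[(cx + x, cy + y, cz + z) for (x, y, z) in cached]
            for (cx, cy, cz) in controls]


cached_frame = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]       # playback at its original place
control_particles = [(10.0, 0.0, 0.0), (0.0, 5.0, 0.0)]  # hypothetical control particles
for instance in instance_cache(cached_frame, control_particles):
    print(instance)
```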

©2024, cebas Visual Technology Inc.